that’s how.

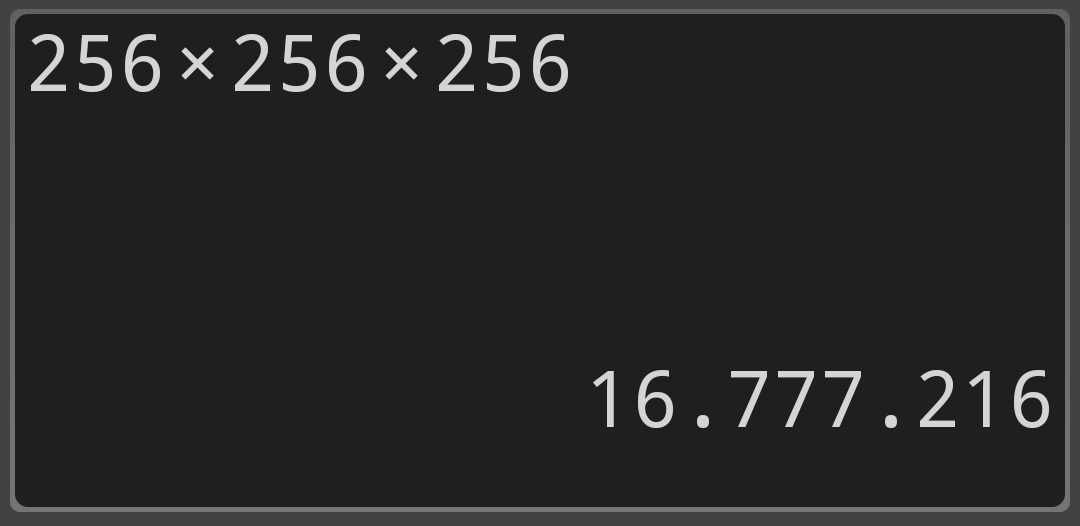

each of the 3 LEDs can have 256 levels of brightness (off included)

take that to the power of three, and you have 16 million colours.
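As a quick check of that arithmetic, here is a minimal Python sketch. It assumes 8 bits (256 levels) per LED as described above; the variable names are only illustrative.

```python
# Assumption: each of the three LEDs (red, green, blue) has 256 brightness levels.
levels_per_led = 2 ** 8              # 256, including "off"
total_colours = levels_per_led ** 3  # every combination of the three LEDs

print(f"{total_colours:,}")  # 16,777,216
```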

but no mortal can actually tell the difference between 255, 255, 255 and 255, 254, 255.

Maybe YOU can’t, but don’t speak for the rest of us 😤

Next you’re going to tell me the human eye is capable of differentiating fps above 30

Nah, that’s crazy, it only goes up to a crispy 24 fps. Everyone knows that.

but no mortal can actually tell the difference between 255, 255, 255 and 255, 254, 255.

https://en.wikipedia.org/wiki/Colour_banding

You can see some slight shifts even at 24-bit depth, if side-by-side. It produces a faint-but-visible banding.

Here’s an example (suggesting use of dithering to obscure it):

I play with the color slider in so many games, I can totally tell the difference. It’s 1 less green.

Yeah, essentially the same sorcery behind every pixel of any modern display. The bulb is one pixel.

So… Wait… Does this mean thousands of Hue bulbs can be a display screen? Has this been done?

those really huge displays are often millions of individual RGB LEDs. it would just be a software nightmare to do with hue bulbs.

If they were hardwired yes, but zigbee with millions of bulbs?

I’m guessing you’d hit interference at some point.

But also latency would be bad and you almost definitely couldn’t synchronize them well.

Yeah, I’ve done something similar with ~120 wifi bulbs for a light show that responded to music and that worked fairly well but I doubt it would have worked with more than a few hundred.

Yeah, fair point. It would be no good to have each pixel of an enormous display doing its own processing, and trying to wirelessly command that many lights at once doesn’t seem possible at all.

Don’t underestimate my power!

Was going to say “what a high quality answer”, then I saw you have twice the votes the post has. Well deserved.

And a 4K TV with 10-bit HDR support can show

((2^10)^3)^(3840 × 2160) = 2^(30 × 8,294,400) = 2^248,832,000

different images, since each of the 3840 × 2160 pixels independently takes one of (2^10)^3 colours.
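A rough sketch of that count in Python, assuming every pixel can independently take any 30-bit value (so the per-pixel counts multiply, which puts the pixel count in the exponent). The variable names are illustrative, and the digit count is estimated with a logarithm instead of building the full number.

```python
import math

bits_per_channel = 10        # 10-bit HDR, as in the post
channels = 3                 # red, green, blue
width, height = 3840, 2160   # 4K UHD

bits_per_pixel = bits_per_channel * channels  # 30 bits per pixel
pixels = width * height                       # 8,294,400 pixels
bits_per_frame = bits_per_pixel * pixels      # distinct frames = 2 ** bits_per_frame

# Decimal digits of 2 ** bits_per_frame, computed without constructing the number.
digits = math.floor(bits_per_frame * math.log10(2)) + 1

print(f"distinct frames = 2^{bits_per_frame:,}")
print(f"that number has roughly {digits / 1e6:.1f} million decimal digits")
```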

The same way your monitor does: https://rgbcolorpicker.com/

They also have white LEDs.

Magic smoke.

Can’t convince me otherwise. Anyone that disagrees is a dirty communist.

I hate when the magic smoke leaks out. Never works right again.

By combining Red, Blue, and Green LEDs.

Each color gets its own value and when you combine them all you get a distinct color value.

Hue bulbs (and any other RGB LED) can display (almost) any color perceptible to the human eye as it combines the three wavelengths of colors our eyes can detect (red, green and blue) and blends them at different brightnesses. The “millions of colors” sell comes from 16-bit color found all over the place in technology. Here’s more info: https://en.m.wikipedia.org/wiki/High_color

16-bit colour gives us around 65000 colours, 24-bit colour gives us the millions mentioned above.

so is it called 24 bit or 8 bit? I feel like most monitors have 8 bit color and the fancy ones have 10, not 24 and 30

It’s just the sum. Monitors have 8 bits per color, making for 24 bits per pixel, giving the millions mentioned. 16-bit is actually 5 bits per color plus one extra bit for a single one of those colors (usually green). But this has downsides, as explained in the article, when going from a higher bit depth to a lower one.

HDR is 10bit per color, and upwards for extreme uses. So it’s sorta true they are 24 or 30 bit, but usually this isn’t how they are described. They normally talk about the bit depth of the individual color.
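To put numbers on the per-color vs. per-pixel naming, here is a small Python sketch. It assumes the common RGB565 layout for 16-bit color (5 bits red, 6 green, 5 blue); `colours` and `pack_rgb565` are hypothetical helper names made up for this example.

```python
def colours(bits_per_channel):
    """Number of distinct colours for the given per-channel bit depths."""
    return 2 ** sum(bits_per_channel)

def pack_rgb565(r, g, b):
    """Pack 8-bit r, g, b into one 16-bit RGB565 value by dropping the low bits."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

print(colours([5, 6, 5]))     # 65536       (16-bit per pixel)
print(colours([8, 8, 8]))     # 16777216    (24-bit per pixel, 8 per color)
print(colours([10, 10, 10]))  # 1073741824  (30-bit per pixel, 10 per color)

# Two 24-bit colours that differ only in the lowest green bit become the
# same 16-bit value, which is the precision loss when reducing bit depth.
print(pack_rgb565(255, 255, 255) == pack_rgb565(255, 254, 255))  # True
```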

also 10 bit raw footage, not 30 bit

Same way a printer works, or color blending for that matter, with RGB or CMYK you can make any color. Primary colors and brightness. Greyscales and magentas are extra-spectral.

Another thing that’s curious about these lights, which also applies to your computer/smartphone display as well, is the fact that it’s able to produce yellow despite only having red, green and blue LEDs in it. If you open up a yellow picture on your monitor and look closely with a magnifying glass, there’s no yellow there.

That’s another thing I don’t get. If you look at your TV screen real close it’s all red/green/blue. Every pixel/cell. How does it appear different from far away?

Human eyes have three kinds of cells (photoreceptors) for color detection. Each kind reacts most strongly to roughly red, green or blue light. If more than one kind is activated, your brain interprets the light based on which cells activated and how strongly. If the red and green cells activate, the light is seen as yellow. The light is seen as white if all of them activate fully.
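The mixing described above can be sketched in a few lines of Python. This is a simplified model, assuming idealized red, green and blue sources on a 0.0 to 1.0 scale; `add_light` is a hypothetical helper, and real displays and eyes are messier than this.

```python
import colorsys

def add_light(*sources):
    """Additively mix RGB light sources (0.0-1.0 per channel), clipping at full brightness."""
    return tuple(min(1.0, sum(channel)) for channel in zip(*sources))

RED, GREEN, BLUE = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

yellow = add_light(RED, GREEN)        # red + green, no yellow emitter involved
white = add_light(RED, GREEN, BLUE)   # all three at full brightness

hue, _, _ = colorsys.rgb_to_hsv(*yellow)
print(yellow, round(hue * 360))  # (1.0, 1.0, 0.0) 60  (60 degrees is yellow on the hue wheel)
print(white)                     # (1.0, 1.0, 1.0)
```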

This also means that light bulbs can produce white light by simply producing three wavelengths (colors) of light. The problem with that kind of “fake” white is that colors will look wrong under such light because of the way objects reflect light. This is very common with low quality LED lights, and even the best smart lights aren’t very good at it. When buying LED lights, you might want to look at the CRI (Color Rendering Index) value and make sure it’s above 90, or as high as possible.

Okay you really want to fuck with your mind, brown is not a color. You can’t break down a rainbow and find brown anywhere in it. There is no such thing as brown light. Yet you can see it every day.

So how the heck is it rendered?

If I am remembering correctly it is mostly just a crap shade of red.

This is a much better explanation than I can give
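For the brown question above, one common description is that brown is a dark orange seen next to something brighter. Here is a minimal Python sketch under that assumption (the 60% brightness factor is an arbitrary pick for illustration).

```python
import colorsys

orange = (1.0, 0.5, 0.0)
# Assumption: "brown" here is simply orange dimmed to 60% brightness.
brown = tuple(0.6 * c for c in orange)  # (0.6, 0.3, 0.0)

orange_h, orange_s, orange_v = colorsys.rgb_to_hsv(*orange)
brown_h, brown_s, brown_v = colorsys.rgb_to_hsv(*brown)

# Same hue and saturation; only the brightness (value) differs.
print("orange", round(orange_h * 360), round(orange_s, 2), round(orange_v, 2))
print("brown ", round(brown_h * 360), round(brown_s, 2), round(brown_v, 2))
```

Both come out at a hue of about 30 degrees, so on a screen or a bulb the "brown" is rendered as a dimmer orange.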

Isn’t pink generally the same phenomenon? Something about it being the “absence of green light” rather than its own distinct spot on the visible spectrum.