I thought this video was rather interesting because at 12:27 the presenter crunches the numbers to find out how many years it would take for a new computer purchase to be more environmentally friendly (in terms of total CO2 emitted) than continuing to use a less efficient used model.

Depending on the specific use case, it could take as little as 3 years to breakeven in terms of CO2 if both systems were at max power draw forever, and as long as 30 if the systems are mostly at idle.
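The breakeven point is simple arithmetic: divide the CO2 emitted to manufacture the new machine by the CO2 its lower power draw saves each year. Here is a rough Python sketch, assuming 24/7 operation at a constant average draw. The function name and every number in it are hypothetical placeholders, not the figures from the video:

```python
"""Rough CO2 breakeven estimate: buying a new efficient PC vs. keeping an old one."""

HOURS_PER_YEAR = 24 * 365  # assumes the machine runs 24/7


def breakeven_years(embodied_kg: float, old_watts: float, new_watts: float,
                    grid_kg_per_kwh: float) -> float:
    """Years until CO2 saved by lower power draw equals the new machine's embodied CO2.

    embodied_kg: CO2 emitted to manufacture the new machine, in kg.
    old_watts, new_watts: average power draw of each machine, in watts.
    grid_kg_per_kwh: carbon intensity of the local electricity, kg CO2 per kWh.
    """
    saved_watts = old_watts - new_watts
    if saved_watts <= 0:
        return float("inf")  # the new machine never pays back its embodied CO2
    saved_kwh_per_year = saved_watts * HOURS_PER_YEAR / 1000
    return embodied_kg / (saved_kwh_per_year * grid_kg_per_kwh)


if __name__ == "__main__":
    # Hypothetical inputs: 300 kg embodied CO2, grid at 0.4 kg CO2/kWh.
    print(f"both at full load: {breakeven_years(300, 120, 60, 0.4):.1f} years")
    print(f"both mostly idle:  {breakeven_years(300, 12, 8, 0.4):.1f} years")
```

With those made-up inputs, a 60 W saving pays back in under 2 years while a 4 W idle saving takes over 20, the same shape as the 3-versus-30-year spread from the video.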

This should be well known, but it’s often misunderstood. Lots of Reddit selfhosting threads urge people to buy a new mini-PC for its “low power draw”, when usually it draws the same as, or 1-2 watts less than, a laptop from 2012. However, performance per watt is much higher, so if you need massive performance, new is much better; if your system is idling most of the time anyway, there’s basically no difference in buying old.

You just can’t buy too old or the inverse happens and the performance per watt drops. I think you’re right that 2012 is about the cutoff. Maybe 2007 for certain items, like my 2007 iMac. But if you’re getting back to the Pentium 4 era you’ve gone too far and need to turn back around.

Oh god, P4? Yea, those were just 100 watt light bulbs.

My first computer was 33htz. Ran Windows 3.1. And Warcraft 2.

So yeah. The perfect computer.

Well, I would hope for 33 MHz at least… ;-)

A 33 MHz 486DX was great. If you got stuck with a slower SX CPU, things were frequently not so hot.

Sans the light

No, the graphics from Intel back in 07-10 were crap. 2012-2013 would be my bare minimum, with USB 3, if only for loading a new OS.

> No, the graphics from Intel back in 07-10 were crap.

What are you using graphics on a server for instead of just CLI?

Transcoding video for streaming.

How much video card is really needed for transcoding?

I ask because I need to get a video card for transcoding to a 65" 4k TV. I’m converting all my DVDs to MKV and using Jellyfin as my server and client. It transcodes lighter stuff fine (cartoons, old TV shows), but better movies get some artifacts that don’t occur if I have the TV play the same file from a thumb drive.

I’ve read Jellyfin’s recommendation, but it’s really just “use at least this video chipset”, not a particular card, so I’m trying to determine what card I should get.

Server to TV should be local, why are you transcoding? I watch 4K files on my 4K TV without issues, with Kodi because I don’t need Jellyfin for that.

I use Jellyfin to stream when I’m outside my home, and transcoding 4K is what takes a lot of resources.

It’s transcoding because Jellyfin decided it needs to for some reason, frustratingly. I’ve converted to formats/codecs I know the TV supports, and yet Jellyfin still transcodes, with a message about the TV not supporting the codec (yet if I play the file on the TV from a thumb drive, it works fine with the crappy built-in media player). I’m using the Jellyfin client on the TV because it’s easy to install without a Samsung account, and I don’t think I can get Kodi on it. Besides, my experience with Kodi is not great: it’s sluggish on real hardware, I can only imagine how bad it would be on an underpowered garbage TV, and I don’t know if a client even exists for it.

From a bigger picture perspective, I think Jellyfin as a client will be better for my family. It’s a simpler interface with less to get them in trouble.

I’ll need transcoding for other, non-local devices anyway (the annoying iPad, for example), so I still have to address the issue.

If you have any advice about troubleshooting why it’s transcoding, I’m all ears. This is the first time I’ve gotten Jellyfin to work after multiple attempts over the years, across multiple servers and clients, so my experience with it is limited. I’m just glad it works at all; it’s the first media server I’ve gotten working other than Plex.
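A common cause of unexpected transcoding is a stream other than the video: an audio codec the client can’t decode (DTS is often unsupported on TVs) or a subtitle format that has to be burned in. A possible first step is to list every stream in the file with ffprobe, which ships with FFmpeg and must be installed separately. This Python sketch is an illustrative helper, not part of Jellyfin; `probe` and `summarize` are hypothetical names:

```python
"""List every stream in a media file with ffprobe, to spot what a client may reject."""
import json
import subprocess
import sys


def probe(path: str) -> dict:
    """Run ffprobe (part of FFmpeg, must be on PATH) and return its parsed JSON output."""
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-show_streams", "-show_format",
         "-of", "json", path],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def summarize(info: dict) -> list[str]:
    """One line per stream (index, type, codec, profile), plus the container format."""
    lines = []
    for stream in info.get("streams", []):
        lines.append(
            f'#{stream.get("index")} {stream.get("codec_type")}: '
            f'{stream.get("codec_name")} ({stream.get("profile", "n/a")})'
        )
    container = info.get("format", {}).get("format_name")
    if container:
        lines.append(f"container: {container}")
    return lines


if __name__ == "__main__" and len(sys.argv) > 1:
    for line in summarize(probe(sys.argv[1])):
        print(line)
```

Run it against a file that transcodes and one that direct plays, then compare the audio and subtitle lines as well as the video codec.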

Thanks - at least now I know it shouldn’t be transcoding.

You don’t really want to live transcode 4K. It takes a tremendous amount of horsepower to do it in real time. When you rip your movies, make sure they’re in a format that whatever player you’re using can handle. If that means using a streaming stick in your TV instead of the TV’s built-in app, that’s what you do. I think you could technically do it with a 10th-gen or newer Intel CPU with integrated graphics. I know that an Nvidia 2070 Super on a 7th-gen Intel will not get the job done for an upper-end Roku. That’s why all of my 4K video is either H.264 or HEVC, so it all direct plays on my flavor of Roku.

A better value is just getting a 6th-gen or newer Intel CPU and using its built-in GPU to do the transcoding. If you want a discrete GPU, any low-powered card that supports HEVC should work. Alternatively, you can get something like a Roku device to connect to your TV, as they have pretty good compatibility and you’ll avoid transcoding altogether.

For my first server, after moving on from two Raspberry Pis to a Proxmox host, I went with an embedded ASRock motherboard. It was passively cooled, so you know it wasn’t drawing much power, yet it still had multiple SATA ports, and with the right sticks I could fit 32GB of RAM.

Seems better to me than a minipc where you have no expandability, especially no chance for RAID.

I use a 2011 ThinkPad X120e as an FTP/Syncthing server. It was underpowered as a laptop from day one, but still works fine as a lightweight server. The best thing about ThinkPads is that TLP allows you to set min/max charging thresholds, so that you can keep an old battery in good shape for … well, I’ll let you know. This one’s 14 years old and still has a four-hour run time.

One thing I’d like to try is “Wake My Potato” for shutdown / automatic restart when a power outage occurs.

Links:

TLP - https://linrunner.de/tlp/index.html

Wake My Potato - https://github.com/pablogila/WakeMyPotato

@BackYardIncendiary @ProdigalFrog If you have an old Latitude, newer kernels also allow you to set min/max charging thresholds. My Syncthing server (and NAS and a few other things) is an old 2013/2014 Dell Latitude E7240. It’s not the original battery, but I do keep it in decent shape via charging thresholds.

Nice to know that the latitudes work with TLP, since it opens up another set of possibilities for the future!

Does TLP work with Proxmox? I was thinking about trying Proxmox on an old Dell Latitude I have, but I’m worried about the battery exploding.

I’ve never used Proxmox, so I can’t say. But TLP is a utility that starts at boot. I’ve used it with virtualbox running, so if Proxmox runs atop a host operating system, I don’t imagine that it would interfere with TLP.

As an additional note, I usually set the min/max thresholds at 40/80, so the battery will charge any time it’s plugged in and below 40% and stops charging when it reaches 80%.
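Under the hood, on laptops whose kernel driver supports it, a 40/80 setting ends up in two standard power_supply sysfs attributes, `charge_control_start_threshold` and `charge_control_end_threshold`; in TLP the equivalent settings are `START_CHARGE_THRESH_BAT0` and `STOP_CHARGE_THRESH_BAT0` in `/etc/tlp.conf`. For a box without TLP, here is a minimal Python sketch. It assumes the battery is `BAT0` and that the driver exposes both attributes (not every model does). It must run as root, and depending on the hardware the setting may not survive a reboot. `set_thresholds` is a hypothetical helper:

```python
"""Set battery charge thresholds via the kernel's power_supply sysfs attributes.

Assumes the driver exposes charge_control_start_threshold and
charge_control_end_threshold for this battery; not every laptop does.
Writing to /sys requires root.
"""
from pathlib import Path


def set_thresholds(start: int, stop: int,
                   battery: Path = Path("/sys/class/power_supply/BAT0")) -> None:
    """Start charging below `start` percent; stop charging at `stop` percent."""
    if not 0 <= start < stop <= 100:
        raise ValueError("need 0 <= start < stop <= 100")
    start_file = battery / "charge_control_start_threshold"
    end_file = battery / "charge_control_end_threshold"
    current_end = int(end_file.read_text()) if end_file.exists() else 100
    # Some drivers reject a start threshold at or above the current end
    # threshold, so when raising both, write the new end threshold first.
    if start >= current_end:
        end_file.write_text(str(stop))
        start_file.write_text(str(start))
    else:
        start_file.write_text(str(start))
        end_file.write_text(str(stop))
```

`set_thresholds(40, 80)` reproduces the 40/80 split. It’s worth reading both files back afterwards to confirm the driver actually accepted the values.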

Assuming it’s compatible with the hardware at all, it should. You would have to install it on the Proxmox host itself, but Proxmox is basically just Fancy-Debian-for-Virtual-Machine-Hosting, and TLP has Debian packages, so that shouldn’t be a problem. Log in to the Proxmox host itself and install it there.

Yeah, this is why I reuse my old PC parts. Here’s my rough history:

- Built PC w/ old AM3 board for personal use

- Upgraded to AM4, used AM3 build for NAS (just bought drives)

- Upgraded CPU and mobo (wanted mini-ITX), and upgraded NAS to AM5 (did need some RAM)

Going from step 2 to step 3 cut my NAS power draw in half, and it’ll probably be cut again when I upgrade my PC again.

Old PC parts FTW!

I am currently building a home server. The project timeline has been extended, as I had no idea hard drives would be THAT expensive at the capacities I want…

I do have an old computer that is not in use, but I don’t want to run a Bulldozer platform…

So I am basing my new server on the AMD Ryzen 4600G, should be fine

Check serverpartdeals.com for HDDs. They’re used enterprise drives so they’re much cheaper, but there’s always the possibility of getting a bad drive so they should be tested first. If you’re just storing pirated stuff the risk isn’t super great since you can just find the files again. The next best option is shucking external drives like WD Elements/Easystore/MyBook as they’re typically half the price of the bare WD Red drives and are virtually the same thing with a different label. I have bought about 15 drives using both these methods and haven’t had any issues. The shucked drives have been in use since as far back as 2018.
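A typical way to test a used drive is to run a long SMART self-test (`smartctl -t long /dev/sdX`), wait for it to finish, and then check the attributes that indicate failing sectors. Here is a Python sketch of that last check, assuming smartmontools 7.0 or newer (for JSON output via `-j`) and a SATA drive; SAS enterprise drives report health in a different format. The attribute list is a common rule of thumb rather than an exhaustive health check, and the helper names are hypothetical:

```python
"""Flag worrying SMART attributes on a used SATA drive via smartmontools' JSON output."""
import json
import subprocess

# Attributes commonly checked on used HDDs; a non-zero raw value is a warning sign.
WATCHED = {"Reallocated_Sector_Ct", "Current_Pending_Sector", "Offline_Uncorrectable"}


def read_smart(device: str) -> dict:
    """Run `smartctl -A -j DEVICE` (smartmontools 7.0+, usually needs root)."""
    # smartctl's exit status is a bit mask that can be non-zero even when it
    # printed valid output, so a non-zero status is not treated as fatal here.
    result = subprocess.run(["smartctl", "-A", "-j", device],
                            capture_output=True, text=True)
    return json.loads(result.stdout)


def red_flags(report: dict) -> dict[str, int]:
    """Return the watched attributes whose raw value is non-zero."""
    table = report.get("ata_smart_attributes", {}).get("table", [])
    return {
        row["name"]: row["raw"]["value"]
        for row in table
        if row.get("name") in WATCHED and row["raw"]["value"] != 0
    }
```

An empty result from `red_flags(read_smart("/dev/sdX"))` (with `/dev/sdX` as a placeholder) is a good sign; anything else is worth a closer look before trusting the drive with data.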

I am a hobby photographer and usually take a few hundred GBs of photos every year (I shoot in JPG+RAW), I have other media as well, but I am mainly concerned about my photos.

I have them currently saved to a single HDD in my computer, which has worked ok, but I have seen bitrot in some files…

So I want reliable storage. I’m currently thinking of a RAIDZ1 with four normal disks, one parity, one hot spare and one cold spare, and I’m looking for it to last a minimum of ten years with normal maintenance.

I will probably put 64GB of RAM in the server, and possibly add an SSD cache over time.
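To check whether a pool like that covers ten years, here is a back-of-the-envelope Python sketch. It assumes a single RAIDZ1 vdev where one disk’s worth of space goes to parity, ignores ZFS overhead, and uses a hypothetical 8 TB disk size and growth rate; pass `disks=5` if “four normal disks, one parity” means five disks in total. Spares add no capacity. The 80% fill limit is a common ZFS rule of thumb, not a hard limit. (On the bitrot point, a periodic `zpool scrub` verifies every block against its checksum and repairs it from parity.)

```python
"""Back-of-the-envelope RAIDZ capacity and time-to-fill estimate."""


def raidz_usable_tb(disks: int, disk_tb: float, parity: int = 1) -> float:
    """Approximate usable space of one RAIDZ vdev: (disks - parity) * disk size.

    Ignores ZFS metadata, padding and the TB vs. TiB difference, so the real
    figure will be somewhat lower. Hot and cold spares add no capacity.
    """
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_tb


def years_to_fill(usable_tb: float, tb_per_year: float, fill_limit: float = 0.8) -> float:
    """Years until the pool reaches `fill_limit` of its usable space."""
    return usable_tb * fill_limit / tb_per_year
```

For example, `raidz_usable_tb(4, 8)` gives about 24 TB, and at a hypothetical 0.5 TB of new data per year `years_to_fill(24, 0.5)` comes out at roughly 38 years before the pool reaches 80% full.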

I love this community. I used to rant about efficiency all the time on Reddit’s selfhosting community, and everyone thought I was insane. If the damn thing is going to run 24/7 for 5+ years, then put a little thought into its power usage!

I personally love old Dell OptiPlex Micros on eBay. Cheap, plentiful laptop hardware in a cute little box that allows modest upgrades. My primary server, in a full-sized case, is just a laptop CPU, a Ryzen 5600G. It brings me joy that my network has four servers and is still under 75 W idle for everything, including networking gear.

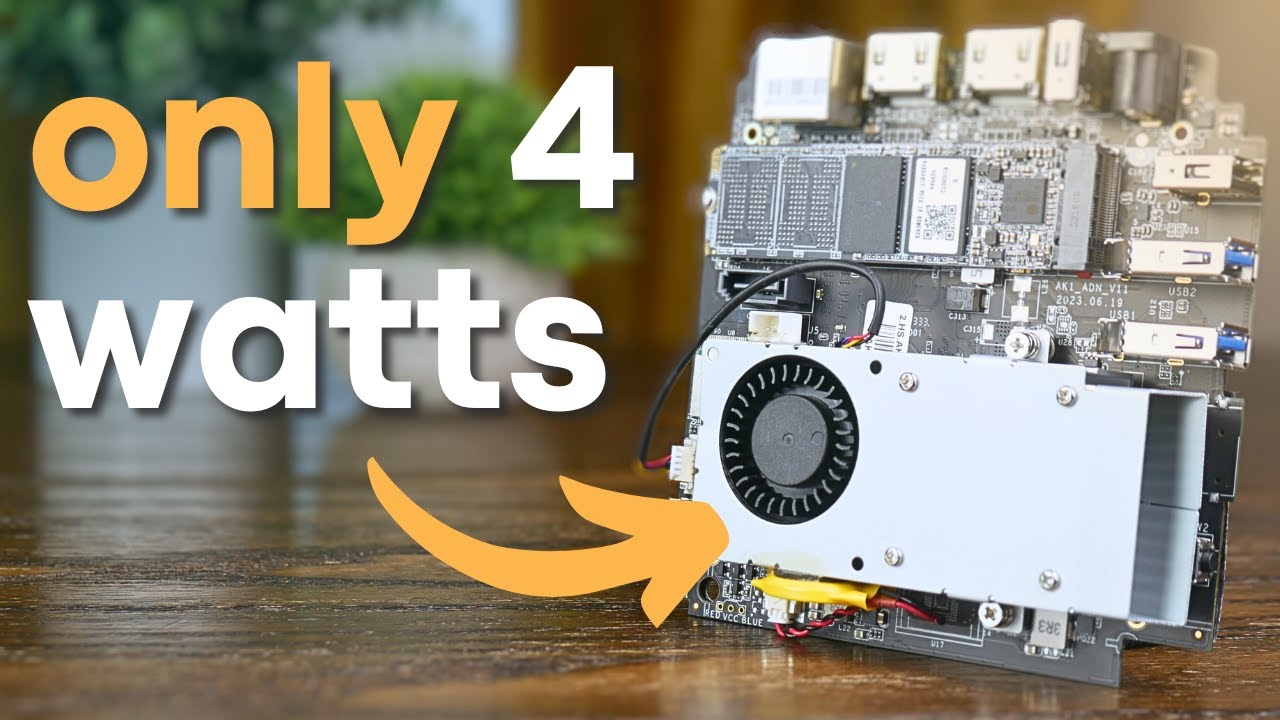

Yep, I moved my server off of my main PC and onto an N305 board. Now my entire server draws less power under load than my PC at idle.

I have an i7-2600 prebuilt for my NAS; it’s idle most of the time, and I bought it for $100 four years ago. I have pretty cheap internet at like $0.12 per kWh, but again, it’s mostly idle, so it probably doesn’t cost much anyway.

I did lol at cheap kwh internet - sounds way better than talking energy costs

Lmao typo

Older desktops can have a somewhat hefty idle power draw because other system components, such as the southbridge, contribute more than you might expect. According to this old review of the i7-2600K, the system idles at 74 W, which at $0.12 per kWh would cost you roughly $77 per year. You might want to confirm that with a Kill A Watt meter if you can (libraries sometimes lend them out), though, since I’m pretty sure that total system power chart includes a discrete GPU, so the real number for a GPU-less system is probably around 40 or 50 W at idle.

If that is accurate, you could potentially replace your i7-2600 with a used Dell Wyse 5070 thin client from ebay for about $40 (in the US), and that idles at 5w, which would only cost you $5 a year at the same rate.

Older thin clients and laptops tend to have much better idle power draws compared to desktops. For other people reading this, if you’re using a desktop for a low-power use case, it’s probably worth finding out what its idle power consumption is and doing the calculation to determine if it’d be worth replacing it with a more efficient used thin-client or office mini-pc.
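The calculation suggested above is short enough to sketch in Python. The wattages, electricity price and $40 purchase price are the figures from this thread; the function names are hypothetical, and the model assumes the machine sits at its idle draw around the clock:

```python
"""Annual electricity cost of an always-on machine, and payback time for a replacement."""

HOURS_PER_YEAR = 24 * 365


def annual_cost(idle_watts: float, price_per_kwh: float) -> float:
    """Cost of running at `idle_watts` around the clock for one year."""
    return idle_watts * HOURS_PER_YEAR / 1000 * price_per_kwh


def payback_years(old_watts: float, new_watts: float, price_per_kwh: float,
                  purchase_price: float) -> float:
    """Years of electricity savings needed to cover the replacement's price."""
    savings = annual_cost(old_watts, price_per_kwh) - annual_cost(new_watts, price_per_kwh)
    return purchase_price / savings if savings > 0 else float("inf")


if __name__ == "__main__":
    # Figures from the thread: 74 W vs. 5 W idle, $0.12/kWh, $40 thin client.
    print(f"i7-2600 system: ${annual_cost(74, 0.12):.2f}/year")
    print(f"Wyse 5070:      ${annual_cost(5, 0.12):.2f}/year")
    print(f"payback:        {payback_years(74, 5, 0.12, 40):.2f} years")
```

With those inputs it prints about $77.79 and $5.26 per year and a payback of roughly 0.55 years, so the thin client would pay for itself in under seven months if the 74 W figure holds. If the real idle draw is closer to 40 W, payback stretches to about a year.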

In Germany consumer power is something like 0.4 EUR/kWh, so economics of running power-hungry hardware might be different. Solar PV might change the equation once again.

I have a 4-node heater here, but only 2 nodes are in use currently. I got it because it was exceedingly cheap (£75 here in the UK, and all 4 nodes have 3xE5-2620s and 48 GB of RAM), but in reality it’s overkill. I’m tempted to build a solar-powered RPi 5 + M.2 server with battery backup just because I can, though it would be for serving websites, both static and WordPress.

I’m wanting something mini at home; I’ve been looking at the GMKtec G9 for its NVMe slots.

I’ve had great luck using Intel NUCs as home servers and HTPC boxes. Since those are now gone, I have found that Beelink is the most cost-effective replacement when I needed to revamp the setup. My biggest complaint about them is that their cooling fans are not super reliable and it is not easy to find compatible replacements. I had to order them direct from China, and there are a few variants with incompatible wiring. One of mine turned out to be the wrong type, and I had to resolder the leads to match the existing broken fan.

It really depends on what you’re doing with it and on what old PCs you have available.

I have an N100 Mini-PC at home in my living room connected to my TV which is both a home server and a TV-Box using Kodi (I even have a remote for it).

Having modern image and video decoding in hardware is pretty useful when I’m using it as a TV box (there is zero stutter), while the rest of the time the thing mostly sits doing some lightweight server tasks (mainly torrenting and SMB server stuff).

Also, it’s a small box that fits fine on my TV stand without standing out and runs silently almost all of the time.

Further, I don’t have any low-power old PCs around: the best are some chunky old notebooks, the rest are old gaming PCs that eat more power at idle than the mini PC does at full load, and even the notebooks aren’t all that low-power.

Mind you, for many years I used an old Asus Eee PC (a very small notebook running Linux) as a home file server (with external HDDs) and had a separate, dedicated hardware TV media server box playing files from it, but eventually that PC stopped working and I found out I could just use my router as a file server.

Last but not least, judging by how long I kept using my TV media server boxes (over almost two decades I had two different ones, and as dedicated hardware they couldn’t easily be upgraded when new video compression standards came out), 10+ years is definitely my time frame for using this Mini-PC.

All this to say that you should consider using old hardware, especially if you have some around and it’s appropriate for the task (as when I used an old Asus Eee PC as a home file server). But also take into account what you’re going to do with it, consider whether new hardware would be better over the timespan you’ll likely be using it, and ask whether getting a more task-appropriate form factor is worth it (for example, a small box-sized Mini PC lets me keep it in my living room on a TV stand next to my TV and my fiber router).

In summary, ponder a bit about what you intend to do with hardware before you decide what to get. Don’t be afraid of using stuff you already have, and also don’t be afraid to get new stuff if it’s actually justified by hard-nosed reasons rather than merely some variant of the “new stuff smell” psychological effect of buying new.

> I have an N100 Mini-PC at home in my living room connected to my TV which is both a home server and a TV-Box using Kodi (I even have a remote for it).

That’s exactly the setup I want, but I’m a little lost. Any good guides you can suggest?

I know generally Linux well enough, but I know fuck all about docker

Kodi is a graphical app, like Firefox, so you won’t use docker for it.

It’s the other half of the home server that’s giving me docker trouble, the *arr stack; I hadn’t actually gotten to the stage of trying to set Kodi up yet

What problem are you having? Docker is very straightforward, just copy the compose file and run a command.

Kodi install instructions are here

I don’t use Docker; I use Lubuntu with normal packages. For example, Kodi is just installed from the Team Kodi PPA repository (which, granted, is outdated, but it works fine and I don’t need the latest and greatest), and I set it up to auto-start when X starts, so that on the TV it’s as if Kodi is the machine’s interface.
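One way to get this auto-start behavior is to drop a `.desktop` file into `~/.config/autostart`. This assumes a desktop that follows the freedesktop.org XDG Autostart specification (LXQt on Lubuntu does) and that the Kodi launcher command is `kodi`. Here is a small Python sketch; the helper name is hypothetical, and it isn’t necessarily how the setup above was done:

```python
"""Create an XDG autostart entry so Kodi launches with the desktop session."""
from pathlib import Path
from typing import Optional

# Minimal desktop entry; assumes the Kodi launcher on PATH is called `kodi`.
DESKTOP_ENTRY = """[Desktop Entry]
Type=Application
Name=Kodi
Exec=kodi
"""


def install_autostart(home: Optional[Path] = None) -> Path:
    """Write .config/autostart/kodi.desktop under `home` (default: ~) and return its path."""
    base = home if home is not None else Path.home()
    autostart = base / ".config" / "autostart"
    autostart.mkdir(parents=True, exist_ok=True)
    entry = autostart / "kodi.desktop"
    entry.write_text(DESKTOP_ENTRY)
    return entry
```

Calling `install_autostart()` once as the TV user is enough; on the next login the session starts Kodi, and deleting the file undoes it.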

qBittorrent is just the server-only package (qbittorrent-nox), which I control remotely via its web interface, and the rest is normal stuff like Samba.

After the initial setup, the actual Linux management can be done remotely via SSH.

That said, LibreELEC is a Linux distro that comes with Kodi built in (it’s basically Kodi and just enough Linux to run it), so, assuming it’s possible to install more software on it, it might be a better option. I only found out about it once my setup was already running, so I never got around to trying it. LibreELEC can even run on weaker hardware such as a Raspberry Pi or some of its clones.

You can also get Kodi as a Flatpak, which works out of the box on various Linux distros, so if you need the latest and greatest Kodi plus a full-blown Linux distro for other stuff, you might choose a distro based on Flatpak support and being reasonably lightweight. I actually went with Lubuntu precisely because it uses a lightweight window manager and I expected the N100 mini-PC to need it. In practice the hardware can probably run much heavier stuff, but lighter software means the CPU load seldom goes up significantly, so the fan seldom turns on and the thing is quiet most of the time: you only hear the fan spin up and then down again once in a while, even in summer.

As for Docker, there are a lot of instructions out there on how to install Kodi with Docker, but I never tried it.

Also, you might want to get a remote like this one, a wireless remote with a USB adapter, not because of the air-mouse feature (frankly, I never use it) but simply because its buttons are mapped to exactly the shortcuts that Kodi uses, so using it with Kodi on Linux is just like using a dedicated remote for a TV media box. In fact, all of those buttons are keyboard shortcuts (the remote just sends keypresses to the PC when you press a button), and the keyboard shortcuts for media players seem to be a standard.

One advantage of a Raspberry Pi is the possibility of using the TV remote to control Kodi thanks to HDMI-CEC support.

Well, the N100 does have a lot more breathing space in terms of computing power, so it’s maybe a better bet for something you want to use for a decade or more, and that remote control I linked to above does work fine, except for the power button (which will power your Linux off but won’t power it back on).

I actually tried an Android TV box (which is really just an SBC in the same range of processing power as the Pi) for this before going for the Mini PC, and it was simply not as smooth.

That Mini-PC has enough computing power room (plus the right processing extensions) that I can be torrenting over OpenVPN on a 1Gb/s connection whilst watching a video from a local file and it’s not at all noticeable on the video playback.

What are you using for storage?

External 2.5" HDDs connected via USB for longer-term bulk storage and for using it as a NAS, a smaller internal NVMe SSD for the OS, and a larger one (but SATA, so slower) for the directory where torrents go.

The different drive performances fit my usage pattern just fine whilst optimizing price per GB.

External 3.5" would be cheaper for bulk storage but the 2.5" are a leftover from when I was more constrained in terms of physical space.

> breakeven

Not a word, my dude.