I gotta give LibreELEC a 👍. If your TV supports HDMI-CEC (HDMI control), using the TV remote to control Kodi works out of the box.

The Jellyfin plugin works incredibly easily too. YouTube, not so much: Google's API is not up to spec.

One more thing I've overheard about the Pi 5: it can apparently run Android TV, which would cover all of your requirements.

Hypervisor: gotta say, I personally like a rather niche product. I love Apache CloudStack.

Apache CloudStack is really meant for companies that provide VMs and Kubernetes (K8s) clusters to other companies. However, I've set it up for myself in my lab, accessible only over VPN.

What I like best about it is that it's meant to have everything deployed on it via Terraform and cloud-init. Since I'm actively pushing myself in that direction and looking for a DevOps role, it suits me quite well.

Standing up a K8s cluster on it is incredibly easy. It's basically all done with cloud-init, and the process is heavily automated. In fact, it took me 15 minutes to stand up a 25-node cluster with 5 control-plane nodes and 20 worker nodes.
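To make the cloud-init part a bit more concrete, here is a rough sketch of what per-node user-data for a 5 control-plane / 20 worker layout could look like. It assumes a kubeadm-based bootstrap, which is my assumption for illustration and not necessarily what CloudStack does under the hood; the endpoint name, hostnames, and the token/hash/key placeholders are all hypothetical.

```python
"""Hypothetical sketch: per-node cloud-init user-data for a kubeadm-based
K8s cluster (5 control-plane nodes, 20 workers). kubeadm, the endpoint and
the placeholder secrets are assumptions for illustration only."""

CONTROL_PLANE_ENDPOINT = "k8s-api.lab.internal:6443"  # hypothetical VIP / DNS name
JOIN_TOKEN = "<token>"            # placeholder, printed by `kubeadm init`
CA_CERT_HASH = "sha256:<hash>"    # placeholder, printed by `kubeadm init`
CERT_KEY = "<certificate-key>"    # placeholder, from `kubeadm init --upload-certs`


def user_data(role: str, index: int) -> str:
    """Return a #cloud-config document for one node."""
    if role == "control" and index == 0:
        # The first control-plane node bootstraps the cluster.
        cmd = (f"kubeadm init --control-plane-endpoint {CONTROL_PLANE_ENDPOINT} "
               "--upload-certs")
    else:
        cmd = (f"kubeadm join {CONTROL_PLANE_ENDPOINT} --token {JOIN_TOKEN} "
               f"--discovery-token-ca-cert-hash {CA_CERT_HASH}")
        if role == "control":
            # Extra control-plane nodes also fetch the shared certificates.
            cmd += f" --control-plane --certificate-key {CERT_KEY}"
    return "\n".join([
        "#cloud-config",
        f"hostname: k8s-{role}-{index}",
        "runcmd:",
        f"  - {cmd}",
        "",
    ])


def plan(control: int = 5, workers: int = 20) -> dict[str, str]:
    """Map node name -> user-data for the whole cluster."""
    nodes = {}
    for i in range(control):
        nodes[f"k8s-control-{i}"] = user_data("control", i)
    for i in range(workers):
        nodes[f"k8s-worker-{i}"] = user_data("worker", i)
    return nodes


if __name__ == "__main__":
    cluster = plan()
    print(len(cluster), "nodes")
    print(cluster["k8s-control-0"])
```

In a real setup the token, hash, and certificate key only exist after the first node runs `kubeadm init`, so whatever automation drives this has to hand them to the other nodes; that plumbing is the part a managed service saves you from writing.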

Let's compare it to other hypervisors, though. CloudStack is built to handle global-scale operations: a deployment is typically split into regions, then zones, then pods, then clusters, and finally hosts. Let's just say it can get very, very large if you need it to. And it's free: if you have your own hardware, it's basically closer to Azure or AWS than to VMware, without any licensing costs.
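The region > zone > pod > cluster > host nesting can be pictured as a simple tree. Below is a hypothetical model (names and counts are made up) sized like my lab: one of everything and a single KVM host. The `hypervisor` field sits on the cluster because, as far as I know, CloudStack expects all hosts in one cluster to run the same hypervisor.

```python
"""Hypothetical model of CloudStack's infrastructure hierarchy:
region > zone > pod > cluster > host. Names and sizes are illustrative."""
from dataclasses import dataclass, field


@dataclass
class Cluster:
    name: str
    hypervisor: str  # hosts within one cluster share a hypervisor type
    hosts: list[str] = field(default_factory=list)


@dataclass
class Pod:
    name: str
    clusters: list[Cluster] = field(default_factory=list)


@dataclass
class Zone:
    name: str
    pods: list[Pod] = field(default_factory=list)


@dataclass
class Region:
    name: str
    zones: list[Zone] = field(default_factory=list)


def count_hosts(region: Region) -> int:
    """Walk every level of the hierarchy and count the hosts at the bottom."""
    return sum(
        len(cluster.hosts)
        for zone in region.zones
        for pod in zone.pods
        for cluster in pod.clusters
    )


# A homelab-sized deployment: one of everything, with a single KVM host.
lab = Region("lab", [Zone("zone1", [Pod("pod1", [Cluster("c1", "KVM", ["kvm01"])])])])
print(count_hosts(lab))  # 1
```

Scaling out is just adding nodes at whichever level you need: another host in the same cluster, another cluster (possibly with a different hypervisor) in the pod, or whole new zones and regions.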

Technically speaking, the CloudStack management server can handle a number of different hypervisors if you'd like it to. I believe that includes VMware, KVM, Hyper-V, OVM, LXC, and XenServer. I find that interesting because even if you prefer another hypervisor, CloudStack will still work with it. This is mostly meant as a path for transitioning to KVM, but it should still work, though I haven't tested it.

I have, however, tested it with Ceph for storage, and it does work. Setting that up is perhaps slightly more annoying than with Proxmox. But if you want to take the cloud-provider route, you can create several different types of storage, e.g., HDD vs. SSD tiers.

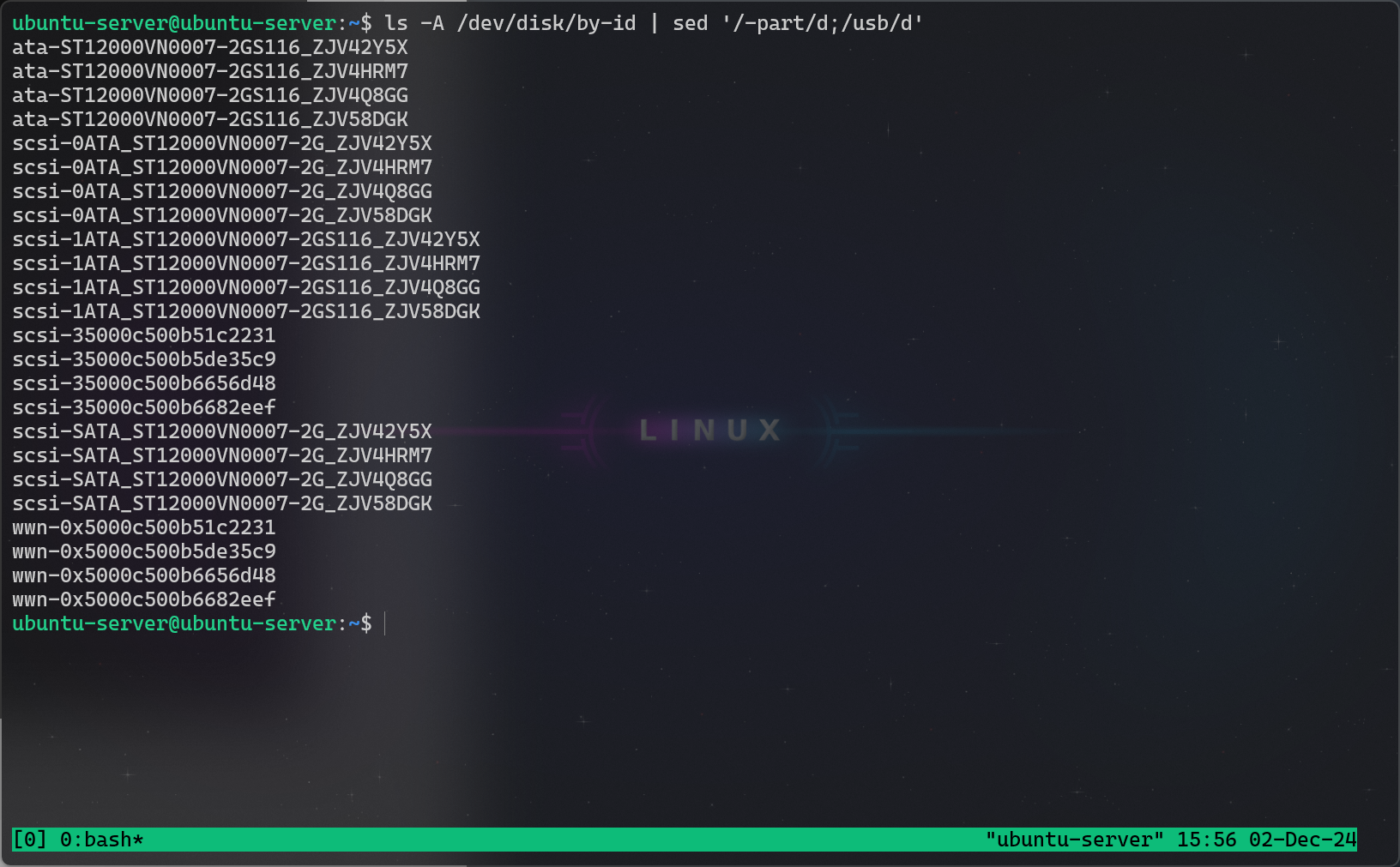

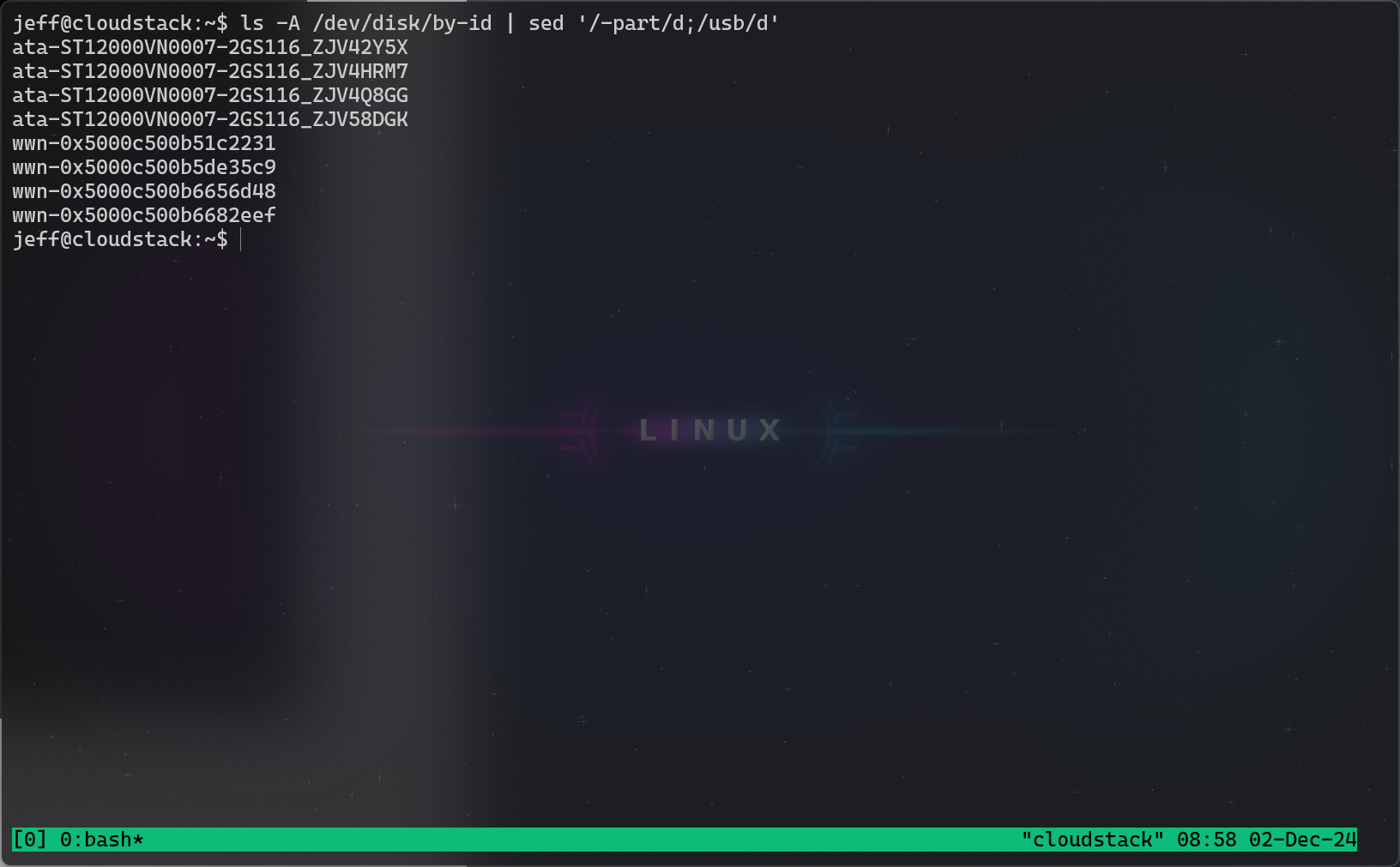
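The HDD vs. SSD tiers from the storage paragraph above are typically done with storage tags: you tag primary storage pools, tag disk offerings, and a volume only lands on a pool whose tags match. Here is a rough sketch of that matching idea, assuming "pool must carry every tag the offering asks for" semantics; the pool names, offerings, and tags are all hypothetical.

```python
"""Hypothetical sketch of tag-based storage tiers: a disk offering is only
placed on pools whose tags include every tag the offering requires.
Pool names, offering names and tags are invented for illustration."""

POOLS = {
    "ceph-hdd": {"hdd"},
    "ceph-ssd": {"ssd", "fast"},
}

OFFERINGS = {
    "Standard (HDD)": {"hdd"},
    "Performance (SSD)": {"ssd"},
}


def eligible_pools(offering: str) -> list[str]:
    """Return the pools whose tag set is a superset of the offering's tags."""
    wanted = OFFERINGS[offering]
    return sorted(name for name, tags in POOLS.items() if wanted <= tags)


for name in OFFERINGS:
    print(name, "->", eligible_pools(name))
```

With Ceph you could back each tier with a separate pool (one on HDD OSDs, one on SSD OSDs) and expose each as its own primary storage in CloudStack; users then just pick the offering.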

Overall, I like it because it works well for IaaS. I have 2,000 VLANs primed for use with its virtual networking. I currently have 1 host joined, with a second host lined up for setup.

Here is the article I used for the initial setup, though I'll admit I personally used separate VLANs for the management IP and for the public IPs: http://rohityadav.cloud/blog/cloudstack-kvm/