Better than anything. I run through Vulkan on LM Studio because ROCm on my RX 5600 XT is a heavy pain.

I like sysadmin, scripting, manga and football.

Ollama has had an issue open about the Vulkan backend for a while, but sadly it doesn’t seem to be going anywhere.

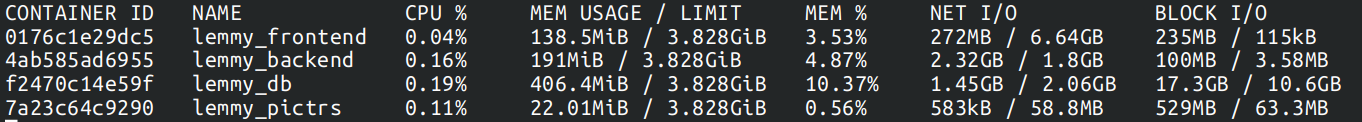

Put up some docker stats in a reply in case you missed it on the refresh

For the whole stack in the past 16 hours

# docker-compose stats

Depends on how many communities you subscribe to and how much activity they have.

I’m running my single-user instance, subscribed to 20 communities, on a 2c/4g VPS that also hosts my Matrix server and a bunch of other stuff, and right now I mostly see peaks of 5 to 10% CPU, with RAM at 1.5GB.

I have been running for 15 months and the Docker volumes total 1.2GB. A single plain-text pg_dump of the Lemmy database is 450M.

From what I understand it’s still limited by the server to real time, so downloading a 2-hour movie would still take two hours 🥲

Yeah, I have been eyeing upgrades to get AVX-512 anyway, because lately I have been doing very heavy, very low preset AV1 encodes, but when they are a dick about it I just feel like postponing it.

lol for the past 15 years I have “rebuilt” my desktop every 5 years, but I didn’t expect they would try to force me out of my Ryzen 7 3700X right on the date

Is it for security? I think it is mostly recommended because your home router is likely to have a dynamic address.

This is in regards to opening a port for WG vs a tunnel to a VPS. Of course directly exposing nginx on your router is bad.

remnant, partially because it’s a Frankenstein of second-hand parts from Wallapop and dusted-off pieces from my old computers, partially as a weeb reference to the world of RWBY lol

lol same I like to know exactly where the data is

I always do this for any service, but I also put a dump of the DB in the top directory to keep a clean copy that is version independent. They won’t take much more space, honestly.
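A minimal sketch of that dump step, assuming a compose service named `postgres` and a database and user both named `lemmy` (all three names are assumptions, adjust them to your stack). `dump_db` and the `COMPOSE` variable are hypothetical helpers, the latter only there so the compose command can be swapped.

```shell
# Hypothetical helper: write a plain-text dump into the given directory.
# Assumed names (not from the original): service "postgres", user "lemmy", db "lemmy".
dump_db() {
    out_dir="${1:-.}"
    compose="${COMPOSE:-docker-compose}"
    out_file="$out_dir/lemmy-$(date +%F).sql"
    # -T disables pseudo-TTY allocation so stdout can be redirected to a file
    $compose exec -T postgres pg_dump -U lemmy lemmy > "$out_file" || return 1
    # Print the path of the dump that was written
    echo "$out_file"
}
```

Run it from the directory holding the compose file, e.g. `dump_db .`, before taking the file-level backup.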

It doesn’t cover permissions unless you are willing to set up HTTP auth on your webserver, but I really enjoy mdBook. It looks clean and is still just markdown.

I guess that’s fair for single service composes but I don’t really trust composes with multiple services to gracefully handle only recreating one of the containers

I do it that way. Enable email notifications for new tagged releases, something arrives, check changelog, everything fine?

docker-compose pull; docker-compose down; docker-compose up -d

And we are done

Could you not use the DNS challenge?

I have no experience outside of Blocky, but the configuration file is so damn simple and clean I have trouble even considering anything else.

Daily, usually keeping only the last week or so
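For the "last week or so" part, one way to sketch the pruning, assuming the daily backups are plain files ending in `.sql` in a single directory (the function name, the extension and the layout are assumptions, not my actual setup):

```shell
# Hypothetical helper: delete backup files older than a given number of days.
# Usage: prune_backups DIR [DAYS]   (DAYS defaults to 7)
prune_backups() {
    dir="$1"
    days="${2:-7}"
    # -mtime +N matches files whose age in whole days is greater than N
    find "$dir" -maxdepth 1 -type f -name '*.sql' -mtime +"$days" -exec rm -f {} +
}
```

Calling `prune_backups /path/to/backups 7` right after the daily backup keeps roughly the last week.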

If you keep the same filenames for the video files it should not redownload what it already has.

To automate it, I think it is honestly easier to just run the command from a cron job every 5 minutes.
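As a sketch, a crontab entry for that could look like the following. `/path/to/sync-videos.sh` and the log path are placeholders for whatever command you already run by hand.

```crontab
# m   h  dom mon dow  command
*/5   *  *   *   *    /path/to/sync-videos.sh >> /path/to/sync-videos.log 2>&1
```

Add it with `crontab -e`. If a run can take longer than 5 minutes, the runs will overlap unless the script guards against that itself.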

I have a lot of artwork I downloaded over the years that was saved as PNG files, and after converting it losslessly to AVIF I was still able to regain some space.

For videos you can’t afford lossless if you want to recover space, but visually lossless results are usually good enough with AV1.